The FE224NV Flash Enclosure is an all-flash storage system designed to deliver high performance with consistently low latency. Engineered for demanding enterprise workloads, including Artificial Intelligence (AI), Big Data, High Performance Computing (HPC), and Software-Defined Storage (SDS), the FE224NV is an end-to-end NVMe® platform.

The FE224NV supports up to 24 NVMe SSDs, connected through two or four 100GbE NVMe over Fabrics (NVMe-oF™) Ethernet ports per IO Module (IOM). Designed for shared environments, it uses a high availability (HA) architecture with dual IOMs in an active/active configuration. Dual-port NVMe SSDs allow each IOM to access every SSD in the system. Redundant, hot-swappable components, including IOMs, power supplies, and cooling fans, further improve the system's reliability.

The enclosure exposes its NVMe SSDs over NVMe-oF, a storage networking protocol designed specifically for NVMe SSDs. NVMe-oF delivers performance close to that of internal NVMe SSDs while adding the flexibility and shared efficiency of network attachment.

This approach lets the FE224NV Flash Enclosure replace traditional direct-attached storage (DAS) such as JBODs, offering both higher performance and greater flexibility. The enclosure also serves as a building block for modern IT architectures, including Storage-as-a-Service (STaaS), Software-Defined Storage (SDS), and Composable Infrastructure solutions.

Key Use Case: Accelerate AI with NVIDIA GPUDirect

As AI, HPC, and data analytics datasets continue to grow, the time spent loading data begins to limit application performance. Fast GPUs are increasingly starved by slow IO, that is, the process of loading data from storage into GPU memory for processing. With NVIDIA GPUDirect®, network adapters and storage drives can read from and write to GPU memory directly, eliminating unnecessary memory copies, lowering CPU overhead, and reducing latency, which can significantly improve performance. GPUDirect Storage enables a direct data path between local or remote storage, such as NVMe or NVMe over Fabrics (NVMe-oF), and GPU memory. It avoids extra copies through a bounce buffer in the CPU's memory, letting a direct memory access (DMA) engine near the NIC or storage move data directly into or out of GPU memory without burdening the CPU.

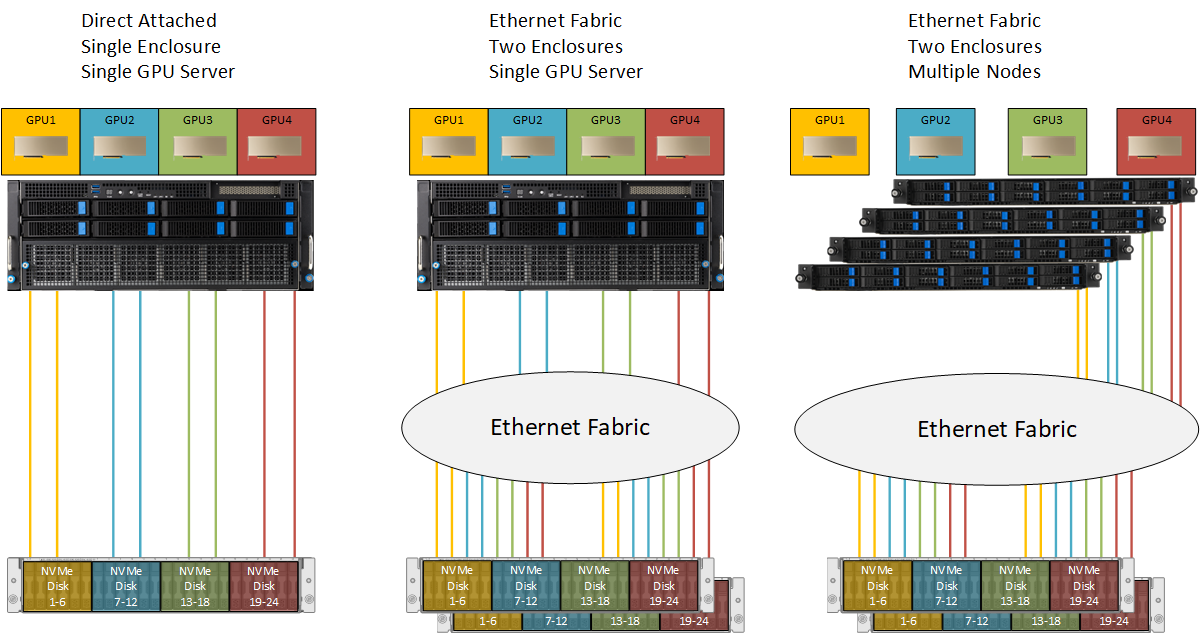

Local storage in GPU servers is not enough to saturate multiple fast GPUs with data. The Zstor FE224NV expansion enclosure addresses this by adding external NVMe capacity, so that more drives can be allocated to each GPU. A single enclosure can be direct-attached to RDMA network adapters, or multiple enclosures can be attached via an Ethernet fabric.
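As a rough illustration of allocating drives to GPUs, the sketch below splits the enclosure's 24 drive bays into equal, contiguous groups, one group per GPU. The GPU count, the helper name `allocate_bays`, and the contiguous-grouping policy are all hypothetical; the real allocation depends on how the host maps NVMe-oF namespaces to GPUs.

```python
# Hypothetical helper: evenly split the FE224NV's 24 drive bays among GPUs.
# Bay numbering (1-24) follows the datasheet; the grouping policy is an assumption.
TOTAL_BAYS = 24


def allocate_bays(num_gpus: int, total_bays: int = TOTAL_BAYS) -> dict[int, list[int]]:
    """Return {gpu_index: [bay numbers]} using contiguous, equal-sized groups."""
    if num_gpus <= 0 or total_bays % num_gpus != 0:
        raise ValueError("num_gpus must evenly divide the number of bays")
    per_gpu = total_bays // num_gpus
    return {
        gpu: list(range(gpu * per_gpu + 1, (gpu + 1) * per_gpu + 1))
        for gpu in range(num_gpus)
    }


if __name__ == "__main__":
    # Example: a hypothetical 8-GPU server gets 3 bays per GPU.
    for gpu, bays in allocate_bays(8).items():
        print(f"GPU {gpu}: bays {bays}")
```

Contiguous groups keep each GPU's drives within one port's block of bays in both the 2-port and 4-port configurations, which may simplify path planning; other policies (for example, spreading drives across ports) are equally possible.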

Deployment Examples:

Performance Highlights:

| FE224 Configuration | IOPS Read | IOPS Write | Throughput Read | Throughput Write |
|---|---|---|---|---|
| 2 ports per controller | 6 million | 4 million | 46 GB/s | 40 GB/s |
| 4 ports per controller | 11 million | 6.5 million | 90 GB/s | 76 GB/s |
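One way to sanity-check these throughput figures is to compare them with the raw Ethernet line rate. The sketch assumes the table reports aggregate throughput across both IO Modules (two 100GbE ports alone top out at 25 GB/s, so 46 GB/s cannot be a single-controller figure), and it uses the nominal 100 Gb/s = 12.5 GB/s per port, ignoring encoding and protocol overhead. The name `line_rate_gbs` is illustrative.

```python
# Compare datasheet throughput with the raw 100GbE line rate.
# Assumption: figures are aggregate across both IO Modules (active/active).
GBPS_PER_PORT = 100 / 8  # 100 Gb/s -> 12.5 GB/s, before protocol overhead
IO_MODULES = 2

# (ports per controller, read GB/s, write GB/s) from the performance table
CONFIGS = [(2, 46, 40), (4, 90, 76)]


def line_rate_gbs(ports_per_controller: int) -> float:
    """Aggregate raw line rate in GB/s across both IO Modules."""
    return ports_per_controller * IO_MODULES * GBPS_PER_PORT


for ports, read, write in CONFIGS:
    limit = line_rate_gbs(ports)
    print(f"{ports}-port: line rate {limit:.1f} GB/s, "
          f"read {read / limit:.0%}, write {write / limit:.0%}")
```

Under these assumptions, reads reach roughly 90% of the raw line rate in both configurations, which suggests the network ports, rather than the SSDs, are the main throughput limit.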

Available Models:

| Part Number | Raw Capacity (per enclosure) | Drives (per enclosure) | Ports (per controller) |
|---|---|---|---|
| FE224NV-2-180 | 180TB | 12x 15TB | 2x 100GbE |
| FE224NV-2-360 | 360TB | 24x 15TB or 12x 30TB | 2x 100GbE |
| FE224NV-2-720 | 720TB | 24x 30TB | 2x 100GbE |
| FE224NV-4-180 | 180TB | 12x 15TB | 4x 100GbE |
| FE224NV-4-360 | 360TB | 24x 15TB or 12x 30TB | 4x 100GbE |
| FE224NV-4-720 | 720TB | 24x 30TB | 4x 100GbE |
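The part numbers appear to follow the pattern `FE224NV-<ports per controller>-<nominal raw TB>`. The sketch below decodes that pattern and computes the exact raw capacity from the 15.36 TB and 30.72 TB drive sizes listed in the specifications. The parsing rule is inferred from the table above, not an official naming scheme, and `decode` is a hypothetical helper.

```python
import re

# Inferred naming pattern: FE224NV-<ports per controller>-<nominal raw TB>
PART_RE = re.compile(r"^FE224NV-(?P<ports>[24])-(?P<tb>180|360|720)$")

# Drive options per nominal capacity: (drive count, drive size in TB)
DRIVE_OPTIONS = {
    180: [(12, 15.36)],
    360: [(24, 15.36), (12, 30.72)],
    720: [(24, 30.72)],
}


def decode(part: str) -> dict:
    """Split a part number into ports and nominal TB, plus exact raw TB."""
    m = PART_RE.match(part)
    if m is None:
        raise ValueError(f"unrecognized part number: {part}")
    tb = int(m["tb"])
    return {
        "ports_per_controller": int(m["ports"]),
        "nominal_tb": tb,
        # exact raw TB for each drive configuration, rounded to 2 decimals
        "exact_tb": [round(n * size, 2) for n, size in DRIVE_OPTIONS[tb]],
    }


print(decode("FE224NV-4-720"))
```

The nominal capacities in the part numbers are rounded down: for example, 24x 30.72 TB drives add up to 737.28 TB raw.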

Zstor FE224 All Flash NVMe Enclosure

| Product | Zstor FE224NV Flash Enclosure - 2U 24x 2.5" NVMe SSD Gen4 dual-ported |
|---|---|
| System Type | High-availability, redundant, direct- or fabric-attached flash enclosure |
| Drive Bays | 24x 2.5" 15mm hot-swap U.2 NVMe Gen4 drive bays; 12x or 24x 15.36 TB or 30.72 TB drives for up to 720TB raw capacity |
| Shared Backplane | Dual-ported (2x2) PCIe 4.0 NVMe SSDs |
| Architecture | Non-blocking architecture: 48 PCIe lanes (3x 16) into each IOM and 48 lanes (24x 2) from each IOM to the SSDs, for a total of 96 lanes |
| Configuration | With 2x 100GbE ports: each 100GbE port sees 12 SSDs (drives 1-12 on the first port, drives 13-24 on the second port). With 4x 100GbE ports: each 100GbE port sees 6 SSDs (drives 1-6 on the first port, 7-12 on the second, 13-18 on the third, and 19-24 on the fourth). |
| Management Port | 1 GbE RJ-45 management port per IO Module |
| Network Controller | 2 or 4x 100GbE QSFP28 ports per IO Module for NVMe-oF direct or fabric attachment |
| System Management | IPMI 2.0-compliant AST2500 BMC with IPMI and Redfish |
| Cooling | 6x hot-swap fans, 5+1 redundant |
| Power Supplies | 1600W hot-swappable, 1+1 redundant |
| Dimensions/Weight | 2U rackmount, 19"; 438mm (W) x 88mm (H) x 697mm (D); 22.8 kg |
| Environmental | Temperature: 0 to +35 °C; Humidity: 20 to 80% |
| Compliance Standard | CE, FCC Class A, RoHS |
| Warranty | 3-year warranty |
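The Configuration and Architecture rows can be checked with a few lines of arithmetic. The sketch below encodes the drive-to-port mapping as stated (contiguous blocks of 12 or 6 drives per port on each IO Module) and a simplified non-blocking check that compares upstream and SSD-facing PCIe lanes per IO Module. The function and constant names are hypothetical, and the PCIe 4.0 figure (16 GT/s per lane with 128b/130b encoding) is the standard raw signaling rate, not a measured value for this enclosure.

```python
# Sketch of the FE224NV drive-to-port mapping and per-IOM PCIe lane budget,
# based on the specification table. All names here are hypothetical.
TOTAL_DRIVES = 24


def port_for_drive(drive: int, ports: int) -> int:
    """Return the 1-based 100GbE port that serves a 1-based drive bay."""
    if ports not in (2, 4):
        raise ValueError("ports must be 2 or 4")
    if not 1 <= drive <= TOTAL_DRIVES:
        raise ValueError("drive must be between 1 and 24")
    drives_per_port = TOTAL_DRIVES // ports  # 12 with 2 ports, 6 with 4 ports
    return (drive - 1) // drives_per_port + 1


# PCIe lane budget per IO Module, from the Architecture row
UPSTREAM_LANES = 3 * 16               # three x16 links into each IOM
DOWNSTREAM_LANES = TOTAL_DRIVES * 2   # one x2 port of each dual-ported SSD per IOM

# PCIe 4.0 raw rate per lane in GB/s: 16 GT/s with 128b/130b encoding
GEN4_GBS_PER_LANE = 16 * 128 / 130 / 8

# Simplified non-blocking check: upstream lanes cover all SSD-facing lanes
assert UPSTREAM_LANES >= DOWNSTREAM_LANES, "fabric would be blocking"

print(f"{DOWNSTREAM_LANES} SSD lanes per IOM, "
      f"~{DOWNSTREAM_LANES * GEN4_GBS_PER_LANE:.1f} GB/s raw PCIe bandwidth")
print([port_for_drive(d, 4) for d in (1, 6, 7, 12, 13, 18, 19, 24)])
```

Because the upstream and SSD-facing lane counts match, each IOM's PCIe fabric is not oversubscribed by the drives; in practice the 100GbE ports remain the tighter limit.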

For further information, please contact the Zstor Sales Team via e-mail: