GPU-Accelerated Liquid-Cooled Solutions

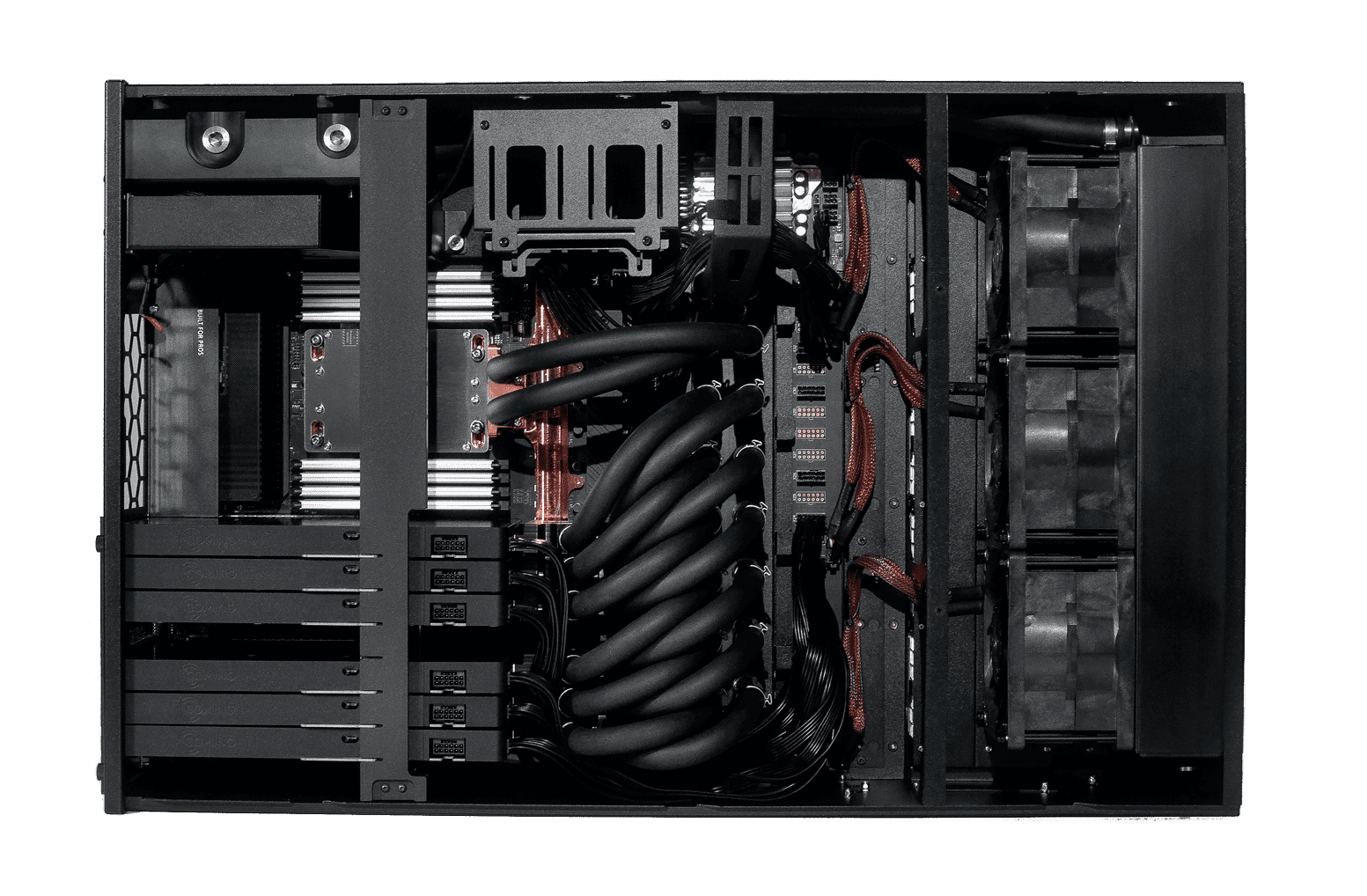

The Comino Grando AI INFERENCE server is a unique, cost-effective solution hosting six liquid-cooled NVIDIA RTX 4090 GPUs with 24 GB of GDDR6X memory each, a sweet spot for the majority of real-life inference tasks. Efficient cooling eliminates thermal throttling, providing up to 50% performance headroom over similar air-cooled solutions. In addition to this performance, Comino solutions come with a maintenance-free period of up to 3 years, maintenance as easy as on air-cooled systems, and the remote Comino Monitoring System (CMS), ready to be integrated into your software stack via API.

GRANDO

Inference Product Line

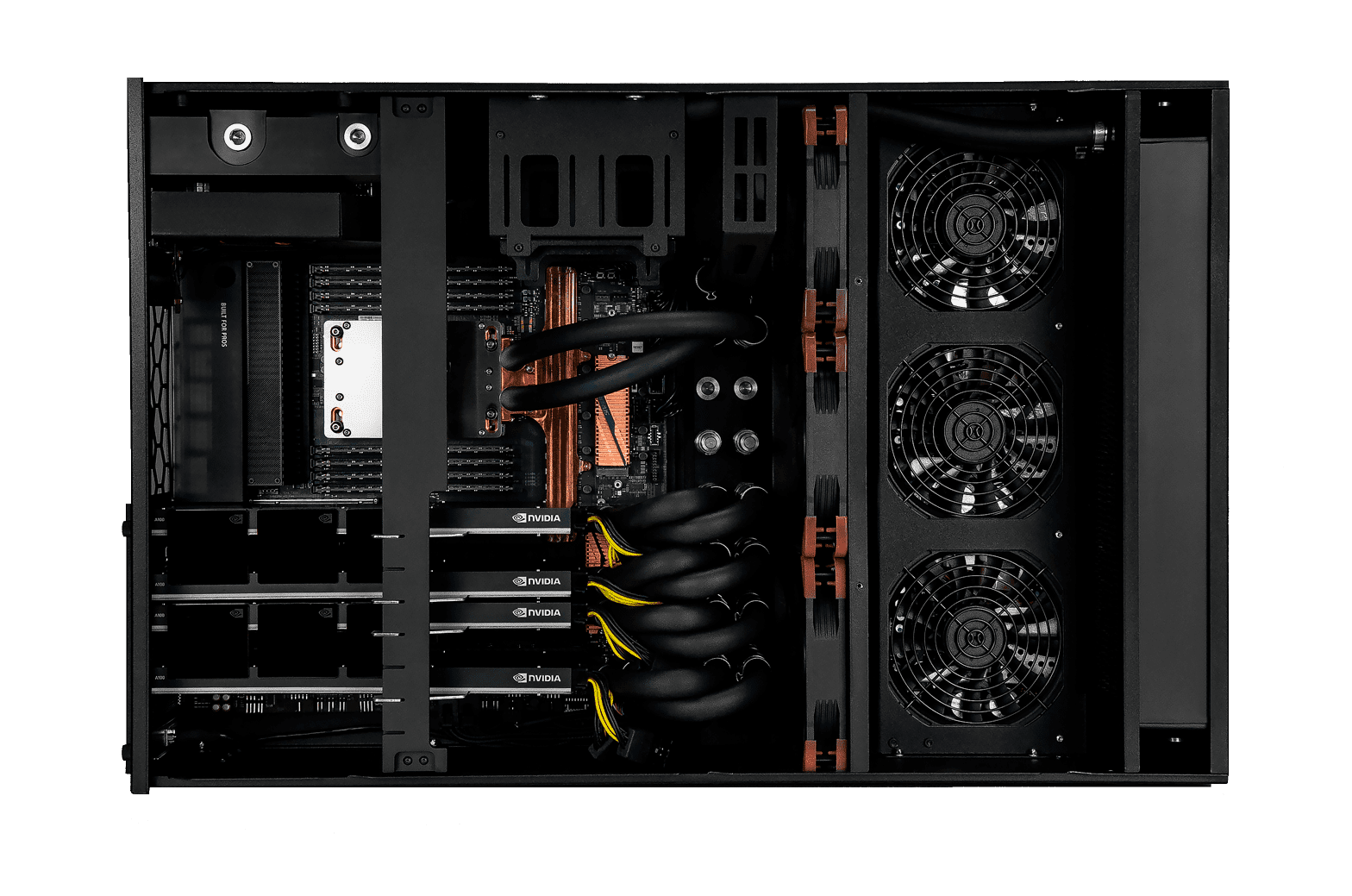

Grando AI INFERENCE servers are pre-tested to run the cuDNN, PyTorch, TensorFlow, Keras and JAX frameworks and libraries, and are equipped with six NVIDIA GPUs (A100 / H100 / L40S / RTX 4090) paired with modern high-frequency multi-core CPUs to provide best-in-class inference performance and throughput for the most demanding and versatile workflows.

Comino Grando AI INFERENCE servers are designed for high-performance, low-latency inference and fine-tuning of pre-trained machine learning and deep learning generative AI models such as Stable Diffusion, Midjourney, Hugging Face, Character.AI, QuillBot, DALL-E 2, etc. Unique, cost-optimised and adjustable multi-GPU configurations are well suited to scaling on-premises or in a data center.
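As a quick sanity check after deployment, the short sketch below lists the GPUs visible to the driver and compares the count with the six GPUs described above. It relies on the standard `nvidia-smi` query interface; the helper names `parse_gpu_list` and `query_gpus` are illustrative and not part of any Comino tooling.

```python
import shutil
import subprocess

EXPECTED_GPUS = 6  # GPU count stated for Grando AI INFERENCE servers


def parse_gpu_list(csv_text):
    """Parse 'name, memory_mib' lines into (name, memory_mib) tuples."""
    gpus = []
    for line in csv_text.strip().splitlines():
        # Split on the last comma so GPU names stay intact.
        name, memory = (field.strip() for field in line.rsplit(",", 1))
        gpus.append((name, int(memory)))
    return gpus


def query_gpus():
    """Return GPUs reported by nvidia-smi, or [] if it is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=False,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_list(result.stdout)


if __name__ == "__main__":
    gpus = query_gpus()
    for index, (name, memory_mib) in enumerate(gpus):
        print(f"GPU {index}: {name}, {memory_mib} MiB")
    status = "OK" if len(gpus) == EXPECTED_GPUS else "MISMATCH"
    print(f"{len(gpus)} of {EXPECTED_GPUS} expected GPUs visible: {status}")
```

On a machine without NVIDIA drivers the script simply reports zero visible GPUs rather than raising an error.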

AI Inference Servers

| Specification | Grando AI Inference Base | Grando AI Inference Pro | Grando AI Inference Max |
|---|---|---|---|
| GPU | 6x NVIDIA RTX 4090 | 6x NVIDIA L40S | 6x NVIDIA A100/H100 |
| GPU Memory | 6x 24 GB GDDR6X | 6x 48 GB GDDR6 with ECC | 6x 80 GB/94 GB HBM2e |
| CPU | AMD Threadripper PRO 7975WX (32 cores) | AMD Threadripper PRO 7975WX (32 cores) | AMD Threadripper PRO 7975WX (32 cores) |
| System Power Usage | Up to 3.6 kW | Up to 3.0 kW | Up to 3.0 kW |
| System Memory | 256 GB DDR5 | 512 GB DDR5 | 1024 GB DDR5 |
| Networking | Dual-port 10 GbE, 1 GbE IPMI | Dual-port 10 GbE, 1 GbE IPMI | Dual-port 10 GbE, 1 GbE IPMI |
| Storage OS | Dual 1.92 TB M.2 NVMe drive | Dual 1.92 TB M.2 NVMe drive | Dual 1.92 TB M.2 NVMe drive |
| Storage Data/Cache | On request | On request | On request |
| Cooling | CPU & GPU liquid cooling | CPU & GPU liquid cooling | CPU & GPU liquid cooling |
| System Acoustics | High | High | High |
| Operating Temperature | Up to 38 °C | Up to 38 °C | Up to 38 °C |
| Software | Ubuntu / Windows | Ubuntu / Windows | Ubuntu / Windows |
| Size | 439 x 177 x 681 mm | 439 x 177 x 681 mm | 439 x 177 x 681 mm |
| Class | Server | Server | Server |
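To make the GPU memory row easier to compare, the sketch below totals the GPU memory per configuration from the table above and adds a rough, weights-only check of whether a model fits on a single GPU. The model size used in the example (7 billion parameters at 2 bytes each) is a hypothetical illustration, and the estimate deliberately ignores activations, KV cache and framework overhead.

```python
# (gpu_count, memory_per_gpu_gb) taken from the inference table above.
CONFIGS = {
    "Base (RTX 4090)": (6, 24),
    "Pro (L40S)": (6, 48),
    "Max (80 GB option)": (6, 80),
    "Max (94 GB option)": (6, 94),
}


def total_gpu_memory_gb(gpu_count, memory_per_gpu_gb):
    """Aggregate GPU memory across all cards in one system."""
    return gpu_count * memory_per_gpu_gb


def weights_fit_on_one_gpu(params_billions, bytes_per_param, memory_per_gpu_gb):
    """Weights-only estimate: 1e9 parameters at N bytes each is N GB."""
    weights_gb = params_billions * bytes_per_param
    return weights_gb <= memory_per_gpu_gb


if __name__ == "__main__":
    for name, (count, per_gpu) in CONFIGS.items():
        total = total_gpu_memory_gb(count, per_gpu)
        # Hypothetical example model: 7B parameters stored in 16-bit precision.
        fits = weights_fit_on_one_gpu(7, 2, per_gpu)
        print(f"{name}: {total} GB total, 7B FP16 weights fit on one GPU: {fits}")
```

Real deployments need extra memory on top of the weights, so treat the result as a lower bound on what is required.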

Deep Learning Product Line

Comino Grando AI Deep Learning workstations are designed for on-premises training and fine-tuning of complex deep learning neural networks with large datasets, focusing on the field of generative AI but not limited to it. They provide top-tier, unique multi-GPU configurations to accelerate training and fine-tuning of compute-hungry diffusion, multimodal, computer vision, large language (LLM) and other models.

The Grando AI DL MAX workstation hosts four liquid-cooled NVIDIA H100 GPUs with a total of 376 GB of HBM memory and a 96-core Threadripper PRO CPU running at up to 5.1 GHz, providing up to 50% performance headroom over similar air-cooled solutions. In addition to this performance, Comino solutions come with a maintenance-free period of up to 3 years, maintenance as easy as on air-cooled systems, and the remote Comino Monitoring System (CMS), ready to be integrated into your software stack via API.

Grando DL workstations are pre-tested to run the cuDNN, PyTorch, TensorFlow, Keras and JAX frameworks and libraries, and are equipped with four NVIDIA GPUs (A100 / H100 / L40S / RTX 4090) paired with modern high-frequency multi-core CPUs. They provide best-in-class machine learning and deep learning performance combined with silent operation, even for the most demanding and versatile workflows, including Stable Diffusion, Midjourney, Hugging Face, Character.AI, QuillBot, DALL-E 2, etc.

Deep Learning Workstations

| Specification | Grando AI Deep Learning Base | Grando AI Deep Learning Pro | Grando AI Deep Learning Max |
|---|---|---|---|
| GPU | 4x NVIDIA RTX 4090 | 4x NVIDIA L40S | 4x NVIDIA A100/H100 |
| GPU Memory | 4x 24 GB GDDR6X | 4x 48 GB GDDR6 with ECC | 4x 80 GB/94 GB HBM2e |
| CPU | AMD Threadripper PRO 7975WX (32 cores) | AMD Threadripper PRO 7975WX (32 cores) | AMD Threadripper PRO 7975WX (32 cores) |
| System Power Usage | Up to 2.6 kW | Up to 2.2 kW | Up to 2.2 kW |
| System Memory | 256 GB DDR5 | 512 GB DDR5 | 1024 GB DDR5 |
| Networking | Dual-port 10 GbE, 1 GbE IPMI | Dual-port 10 GbE, 1 GbE IPMI | Dual-port 10 GbE, 1 GbE IPMI |
| Storage OS | Dual 1.92 TB M.2 NVMe drive | Dual 1.92 TB M.2 NVMe drive | Dual 1.92 TB M.2 NVMe drive |
| Storage Data/Cache | On request | On request | On request |
| Cooling | CPU & GPU liquid cooling | CPU & GPU liquid cooling | CPU & GPU liquid cooling |
| System Acoustics | Medium | Low | Low |
| Operating Temperature | Up to 30 °C | Up to 30 °C | Up to 30 °C |
| Software | Ubuntu / Windows | Ubuntu / Windows | Ubuntu / Windows |
| Size | 439 x 177 x 681 mm | 439 x 177 x 681 mm | 439 x 177 x 681 mm |
| Class | Workstation | Workstation | Workstation |

Features

LIQUID COOLED

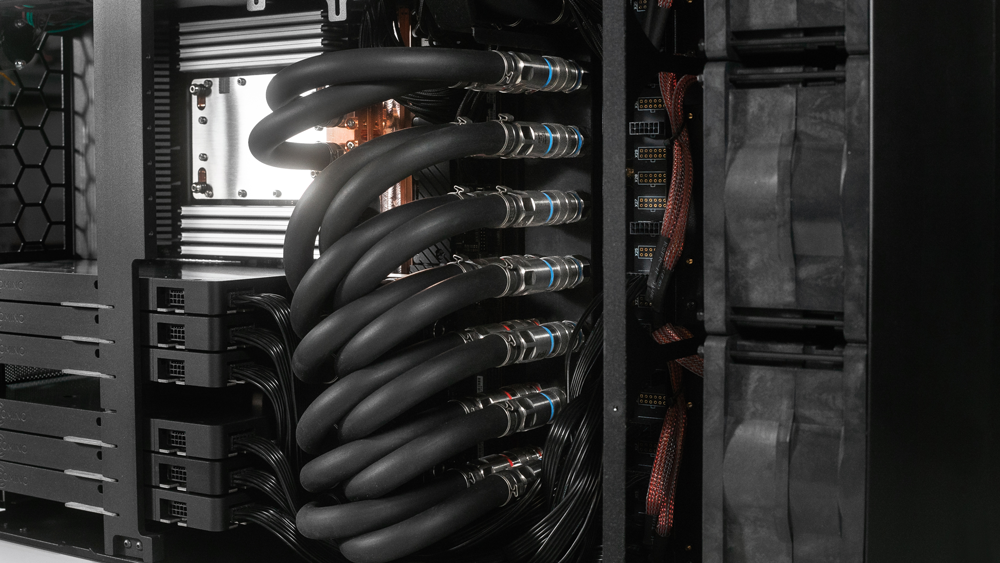

The Comino liquid cooling system unleashes the full performance potential of modern top-tier GPUs and CPUs, prolongs the lifespan of the hardware and ensures 24/7 operation, even in harsh environments, with no thermal throttling.

QUICK-DISCONNECT COUPLINGS

Quick-disconnect couplings simplify maintenance and reduce maintenance time to increase system availability.

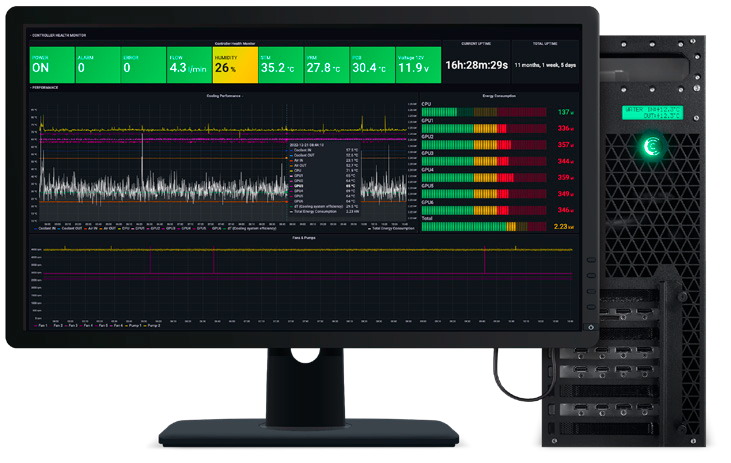

REMOTE MANAGEMENT

A BMC chip provides the intelligence for the IPMI architecture, enabling out-of-band management and enhanced hardware-level control for improved IT efficiency.

COMINO’S MONITORING SYSTEM

Comino's Monitoring System collects log statistics from the cooling system. A web-based GUI lets you inspect several devices remotely. The monitoring system increases system availability.

REDUNDANT POWER SUPPLY (CRPS)

Designed for use in critical IT infrastructure, the redundant power supply provides reliable power for your system. The PSUs operate across the full 100-240 VAC input range as well as 240 VDC, and provide N+M redundancy.
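To illustrate what N+M redundancy means in practice, the sketch below computes how many PSU modules are needed to carry a load (N), how many are installed once M spares are added, and whether the system still has enough capacity after a number of module failures. The 3,600 W load matches the "up to 3.6 kW" figure of the Inference Base configuration; the 2,000 W module rating is a hypothetical value chosen for the example, not a Comino specification.

```python
import math


def psu_plan(load_watts, psu_watts, spare_modules):
    """Return (N, installed) where N modules carry the load and M are spare."""
    needed = math.ceil(load_watts / psu_watts)
    return needed, needed + spare_modules


def survives_failures(load_watts, psu_watts, installed, failed):
    """True if the remaining modules can still supply the full load."""
    return (installed - failed) * psu_watts >= load_watts


if __name__ == "__main__":
    LOAD_W = 3600       # "up to 3.6 kW" from the Inference Base specification
    PSU_W = 2000        # hypothetical module rating, for illustration only
    SPARES = 1          # M in N+M

    needed, installed = psu_plan(LOAD_W, PSU_W, SPARES)
    print(f"N = {needed}, installed = {installed} (N+{SPARES})")
    for failed in range(installed + 1):
        ok = survives_failures(LOAD_W, PSU_W, installed, failed)
        print(f"{failed} failed module(s): load covered = {ok}")
```

With these example numbers, two modules carry the load and a third acts as the spare, so the system tolerates one module failure but not two.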

Tailor-made configurations for your needs

We configure Comino servers and workstations according to your specific use case. Example configurations of Comino servers and workstations are shown above.

For further information please contact the Zstor Sales Team via e-mail: